Is In-Context Learning Feasible for HPC Performance Autotuning?

© Thomas Randall 2025. This is the author’s version of the work. It is posted here for your personal use. Not for redistribution.

Publication Details

Appears in HPC for AI Foundation Models & LLMs for Science 2025. Authors: Thomas Randall, Akhilesh Bondapalli, Rong Ge, and Prasanna Balaprakash.

Abstract

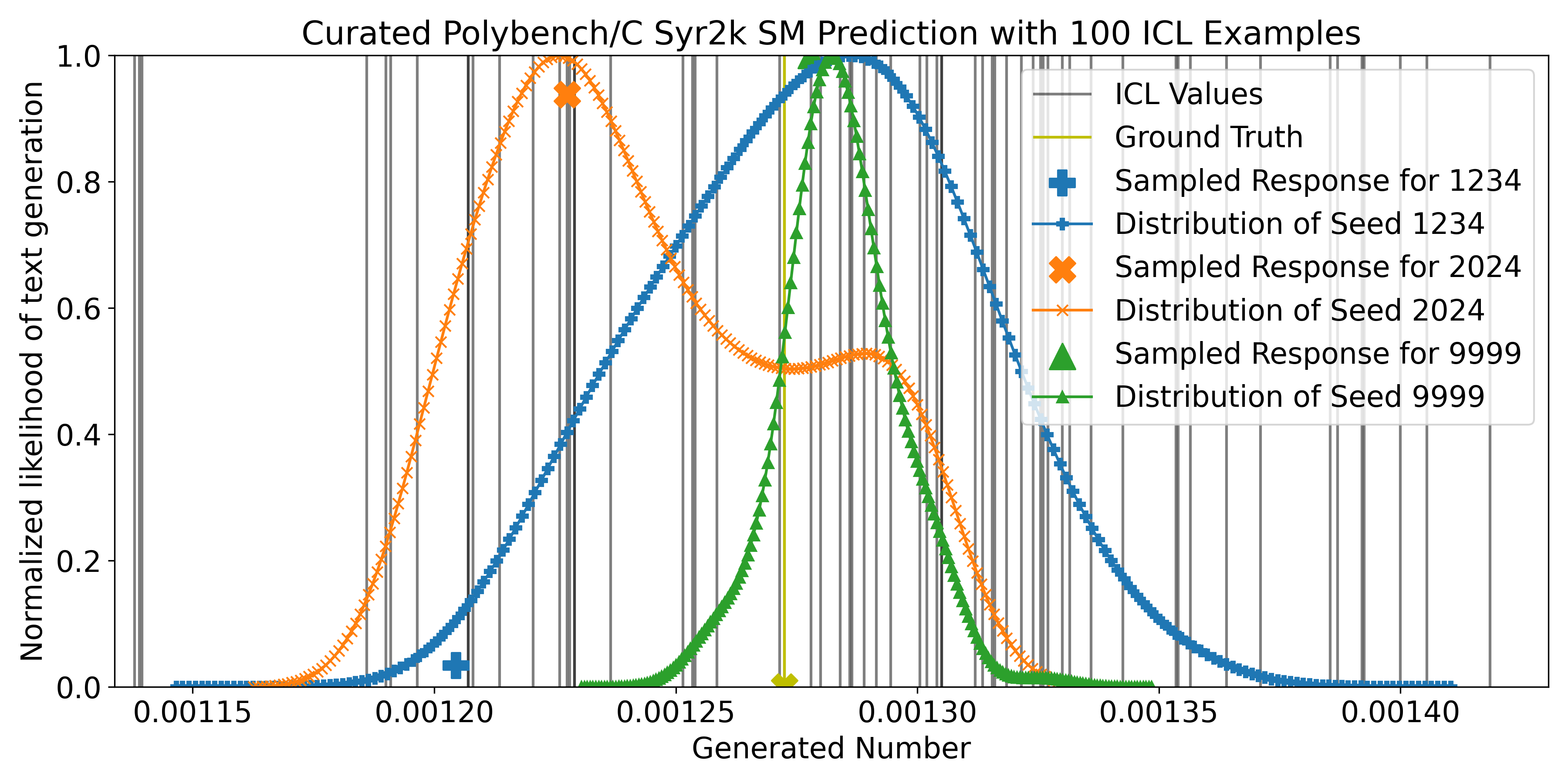

We examine whether in-context learning with Large Language Models (LLMs) can effectively address the challenges of High-Performance Computing (HPC) autotuning. LLMs have demonstrated remarkable natural language processing and artificial intelligence (AI) capabilities, sparking interest in their application across various domains, including HPC. Performance autotuning (the process of automatically optimizing system configurations to maximize efficiency through empirical evaluation) offers significant promise for enhancing application performance on larger systems and emerging architectures. However, this process remains computationally expensive due to the combinatorial explosion of configuration parameters and the complex, nonlinear relationships between configurations and performance outcomes. We pose a critical question: Can LLMs, without task-specific fine-tuning, accurately infer performance-configuration patterns by combining in-context examples with latent knowledge? To explore this, we leverage empirical performance data from real-world HPC systems, designing structured prompts and queries to evaluate LLMs' capabilities. Our experiments reveal inherent limitations in applying in-context learning to performance autotuning, particularly for tasks requiring precise mathematical reasoning and analysis of complex multivariate dependencies. We provide empirical evidence of these shortcomings and discuss potential research directions to overcome these challenges.