Publications

GALE: Leveraging Heterogeneous Systems for Efficient Unstructured Mesh Data Analysis

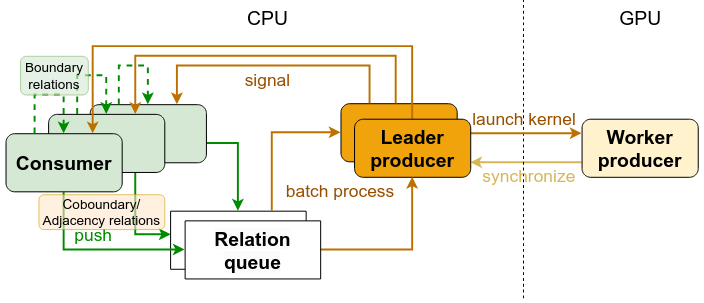

Unstructured meshes present challenges in scientific data analysis due to irregular distribution and complex connectivity. Computing and storing connectivity information is a major bottleneck for visualization algorithms, affecting both time and memory performance. Recent task-parallel data structures address this by precomputing connectivity information at runtime while the analysis algorithm executes, effectively hiding computation costs and improving performance. However, existing approaches are CPU-bound, forcing the data structure and analysis algorithm to compete for the same computational resources, limiting potential speedups. To overcome this limitation, we introduce a novel task-parallel approach optimized for heterogeneous CPU-GPU systems. Specifically, we offload the computation of mesh connectivity information to GPU threads, enabling CPU threads to focus on executing the visualization algorithm. Following this paradigm, we propose GALE (GPU-Aided Localized data structurE), the first open-source CUDA-based data structure designed for heterogeneous task parallelism. Experiments on two 20-core CPUs and an NVIDIA V100 GPU show that GALE achieves up to 2.7x speedup over state-of-the-art localized data structures while maintaining memory efficiency.
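The overlap between connectivity precomputation and analysis can be illustrated with a small CPU-only analogy. This is a sketch under stated assumptions, not GALE's API: a single worker thread stands in for the GPU, and the names `vertex_to_triangles` and `analyze` are hypothetical. Python threads do not give true parallel speedup here, so the example shows only the producer-consumer structure.

```python
from concurrent.futures import ThreadPoolExecutor


def vertex_to_triangles(triangles, vertex_range):
    """Build the vertex -> incident-triangle relation for one segment of vertices."""
    relation = {v: [] for v in vertex_range}
    for tri_id, tri in enumerate(triangles):
        for v in tri:
            if v in relation:
                relation[v].append(tri_id)
    return relation


def analyze(triangles, num_vertices, segment_size=2):
    """Count incident triangles per vertex while a worker precomputes relations."""
    segments = [
        range(start, min(start + segment_size, num_vertices))
        for start in range(0, num_vertices, segment_size)
    ]
    incident_counts = {}
    # Hypothetical stand-in for the GPU: one background worker (the producer).
    with ThreadPoolExecutor(max_workers=1) as producer:
        # Submit every segment up front so the producer can run ahead of the consumer.
        futures = [
            producer.submit(vertex_to_triangles, triangles, segment)
            for segment in segments
        ]
        for future in futures:
            # The consumer blocks only if this segment's relation is not ready yet.
            relation = future.result()
            for vertex, incident in relation.items():
                incident_counts[vertex] = len(incident)
    return incident_counts


if __name__ == "__main__":
    mesh = [(0, 1, 2), (1, 2, 3)]
    print(analyze(mesh, num_vertices=4))
```

In this toy setup the analysis loop only waits when it requests a segment the producer has not finished, which is the cost-hiding idea the abstract describes.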

A Systemic Approach to Maximize Heterogeneous System Performance

Continuous increases in high performance computing (HPC) throughput have served as catalysts for industry and scientific advancement in countless ways that have fundamentally shaped our modern world. Our demands on compute resources continue to scale, but the limitations of Amdahl's law and Dennard scaling have proven increasingly difficult to overcome when approached solely through hardware or software design. Furthermore, many HPC applications fail to utilize the collective system's performance, even on the most advanced supercomputers. However, the resurgence of AI in the industry has promoted an explosion of hardware and software codesign that has fueled massive improvements in GPU design and novel ASICs. These performance improvements are maximized on myriad heterogeneous systems by specially tuning applications. Mimicking these developments across the whole of computing will require similarly holistic approaches combining specialty hardware, software that caters its design to the greatest hardware strengths, and fine-tuning on individual systems to maximize performance. We use three distinct perspectives to address scalable system performance holistically. We analyze the impacts of liquid immersion cooling technologies on sustained application performance and energy efficiency. Next, we present a case study where intentional algorithmic redesign for GPU acceleration permits robust performance improvements that endure through multiple generations of hardware. We find that memory latency forms a primary bottleneck for GPU-accelerated performance and demonstrate how algorithm-specific optimizations can significantly improve performance over multiple architecture generations. Finally, we tie these concepts together through performance optimization techniques that respect software- and hardware-based performance constraints. We improve the re-usability of performance insights with novel transfer learning techniques that make performance optimization costs more predictable and more successful in the short term. Our insights demonstrate the necessity of systemic approaches for performance tuning in HPC.

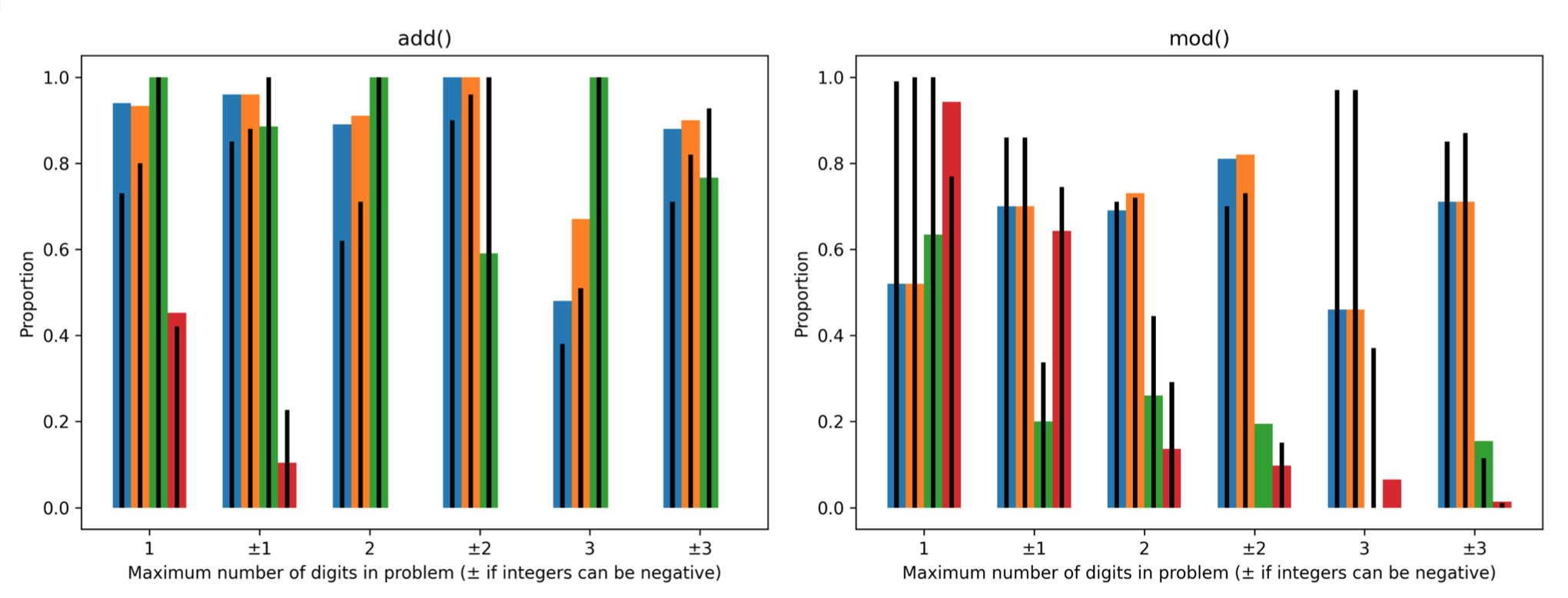

Is In-Context Learning Feasible for HPC Performance Autotuning?

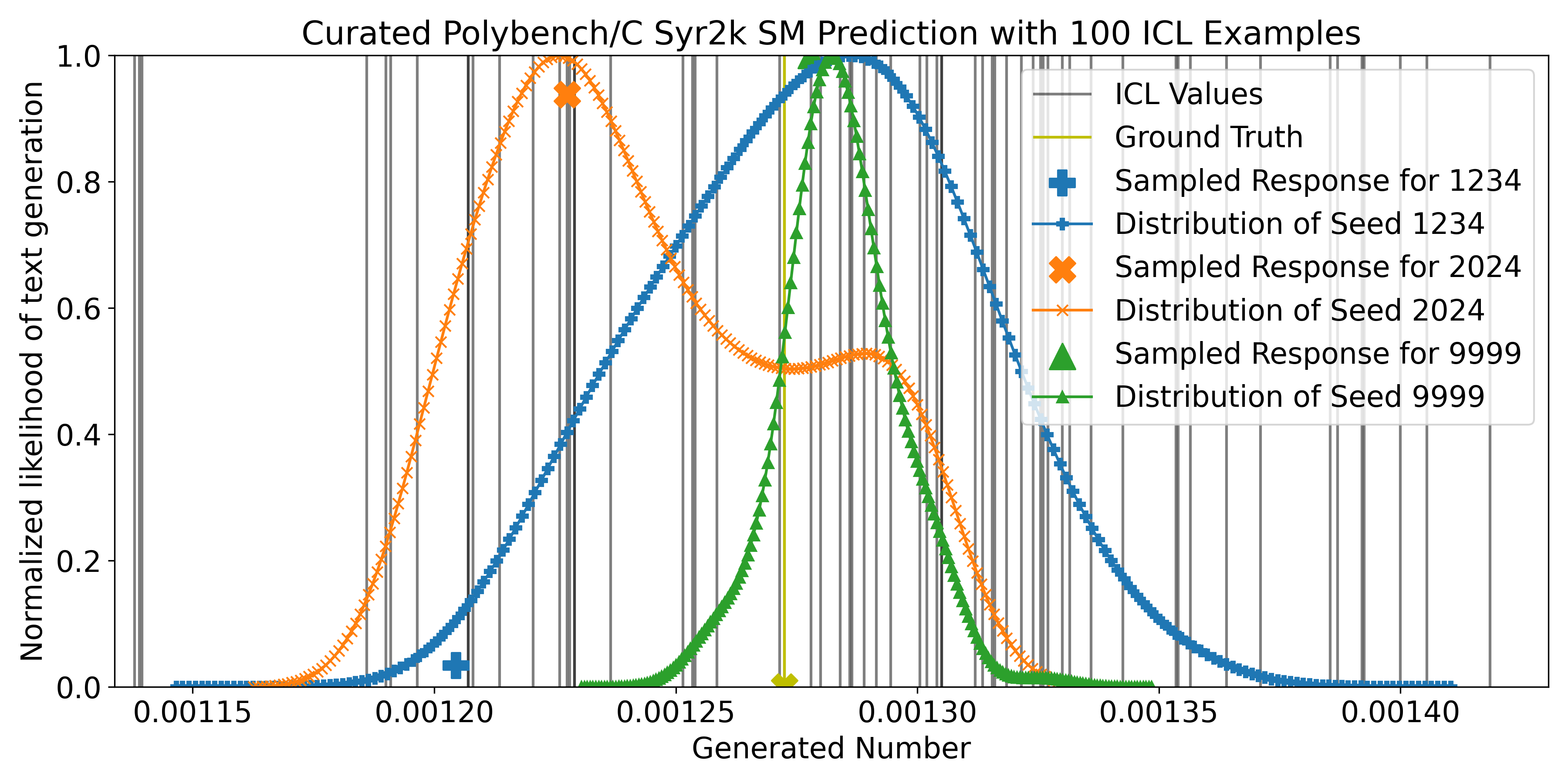

We examine whether in-context learning with Large Language Models (LLMs) can effectively address the challenges of High-Performance Computing (HPC) autotuning. LLMs have demonstrated remarkable natural language processing and artificial intelligence (AI) capabilities, sparking interest in their application across various domains, including HPC. Performance autotuning, the process of automatically optimizing system configurations to maximize efficiency through empirical evaluation, offers significant promise for enhancing application performance on larger systems and emerging architectures. However, this process remains computationally expensive due to the combinatorial explosion of configuration parameters and the complex, nonlinear relationships between configurations and performance outcomes. We pose a critical question: Can LLMs, without task-specific fine-tuning, accurately infer performance-configuration patterns by combining in-context examples with latent knowledge? To explore this, we leverage empirical performance data from real-world HPC systems, designing structured prompts and queries to evaluate LLMs' capabilities. Our experiments reveal inherent limitations in applying in-context learning to performance autotuning, particularly for tasks requiring precise mathematical reasoning and analysis of complex multivariate dependencies. We provide empirical evidence of these shortcomings and discuss potential research directions to overcome these challenges.
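As a rough illustration of what a structured in-context prompt for performance prediction might look like, the sketch below formats measured configuration/runtime pairs as examples and leaves the final runtime blank for the model to complete. The prompt wording, the function name `build_icl_prompt`, and the parameter names (`tile`, `threads`) are assumptions made for this example; the prompts used in the paper may differ.

```python
def build_icl_prompt(examples, query_config):
    """Format (configuration, runtime) pairs as in-context examples plus one open query.

    `examples` is a list of (dict, float) pairs; `query_config` is a dict.
    The layout is hypothetical and chosen only for illustration.
    """
    lines = ["Each line lists a configuration and its measured runtime in seconds."]
    for config, runtime in examples:
        settings = ", ".join(f"{key}={value}" for key, value in sorted(config.items()))
        lines.append(f"Configuration: {settings} -> Runtime: {runtime:.3f}")
    query = ", ".join(f"{key}={value}" for key, value in sorted(query_config.items()))
    # The last line is left incomplete so the model's continuation is the prediction.
    lines.append(f"Configuration: {query} -> Runtime:")
    return "\n".join(lines)


if __name__ == "__main__":
    measured = [({"tile": 16, "threads": 4}, 1.25), ({"tile": 32, "threads": 4}, 0.9)]
    print(build_icl_prompt(measured, {"tile": 32, "threads": 8}))
```

Sorting the parameter names keeps every example in the same column order, so the only thing that varies between lines is the values themselves.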

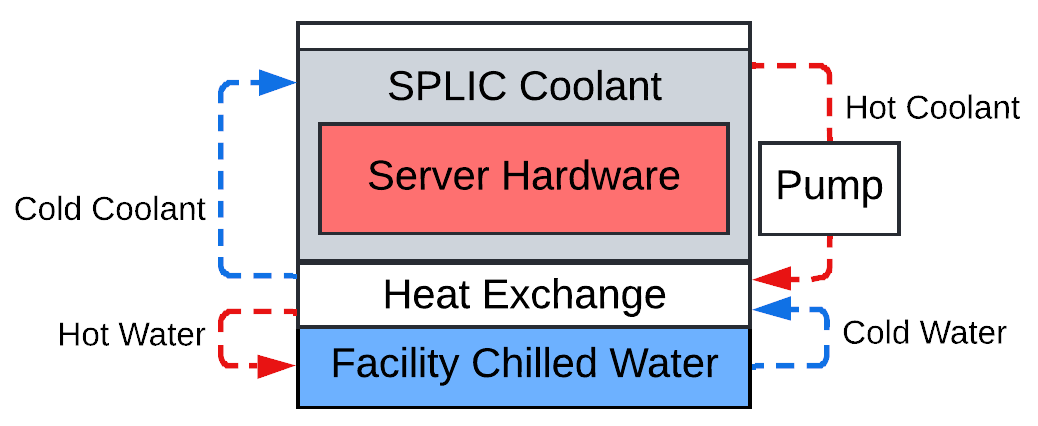

Thermal Behaviors in Liquid Immersion Cooling under Various Workloads: a Case Study

The growing need for energy-efficient computing has led to many novel system innovations, including liquid immersion cooling. While many myths about the technology have been dispelled, the actual impact of this cooling solution on thermal conditions in real computing scenarios remains under-reported and under-studied. In this work, we collate data from multiple system monitoring tools to perform case-study analyses of the thermal behaviors of immersed hardware, aiming to evaluate the effectiveness of liquid immersion cooling for high-performance and datacenter applications.

Poster for OMNI Internship @ Oak Ridge National Laboratory, 2024

Large Language Models (LLMs) capture a certain amount of world knowledge spanning many general and technical topics, including programming and performance. Without fine-tuning, the use of In-Context Learning (ICL) can specialize LLM outputs to perform complex tasks. In this work, we seek to demonstrate the regression capabilities of LLMs in a performance modeling capacity. We find initial evidence of limitations that may constrain LLM utility even after fine-tuning.

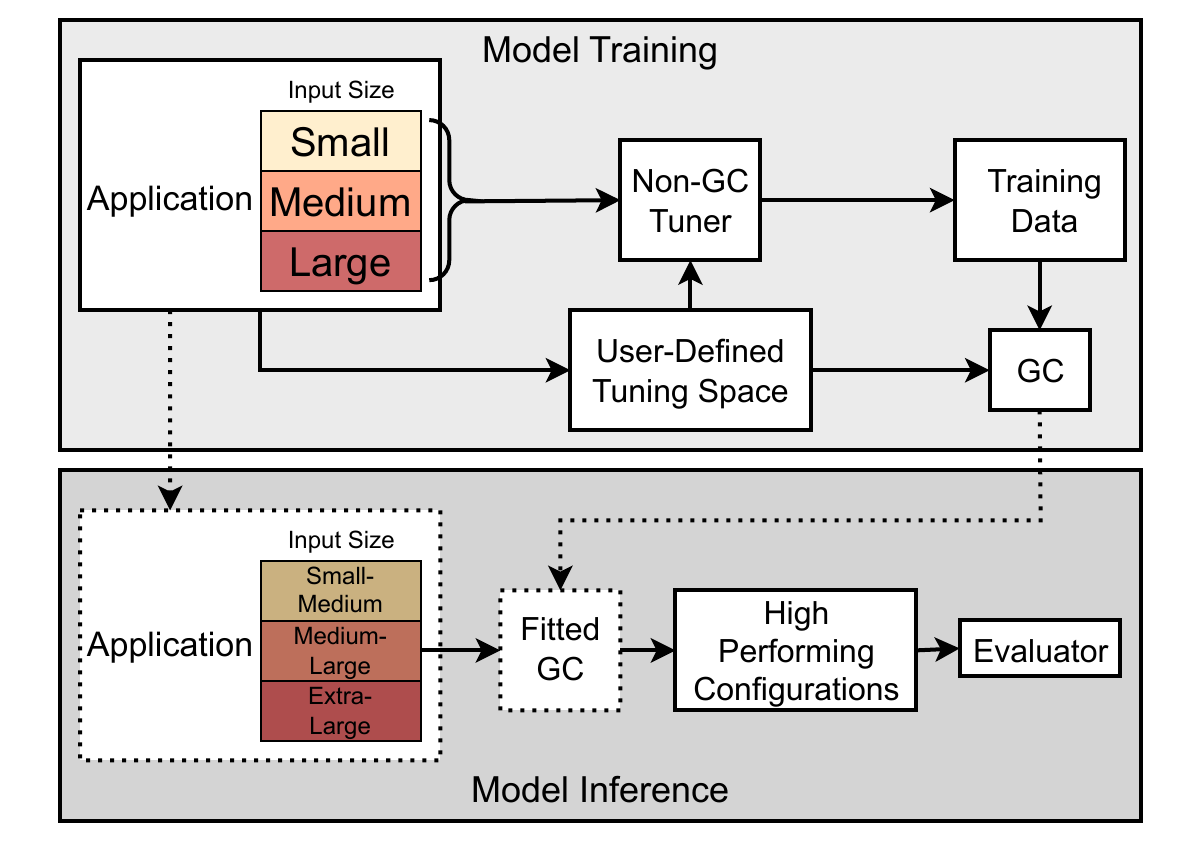

Transfer-learning-based Autotuning using Gaussian Copula

As diverse high-performance computing (HPC) systems are built, many opportunities arise for applications to solve larger problems than ever before. Given the significantly increased complexity of these HPC systems and application tuning, empirical performance tuning, such as autotuning, has emerged as a promising approach in recent years. Despite its effectiveness, autotuning is often a computationally expensive approach. Transfer learning (TL)-based autotuning seeks to address this issue by leveraging the data from prior tuning. Current TL methods for autotuning spend significant time modeling the relationship between parameter configurations and performance, which is ineffective for few-shot (that is, few empirical evaluations) tuning on new tasks. We introduce the first generative TL-based autotuning approach based on the Gaussian copula (GC) to model the high-performing regions of the search space from prior data and then generate high-performing configurations for new tasks. This allows a sampling-based approach that maximizes few-shot performance and provides the first probabilistic estimation of the few-shot budget for effective TL-based autotuning. We compare our generative TL approach with state-of-the-art autotuning techniques on several benchmarks. We find that the GC is capable of achieving 64.37% of peak few-shot performance in its first evaluation. Furthermore, the GC model can determine a few-shot transfer budget that yields up to 33.39× speedup, a dramatic improvement over the 20.58× speedup using prior techniques.
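The general idea of fitting a Gaussian copula to prior high-performing configurations and sampling new candidates from it can be sketched in pure Python. This is a minimal textbook-style construction under stated assumptions, not the paper's implementation: parameters are numeric, at least two prior configurations are available, marginals are empirical, and all function names and the sample data are hypothetical.

```python
import math
import random
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def _normal_scores(column):
    """Map one parameter column to standard-normal scores via its empirical ranks."""
    n = len(column)
    order = sorted(range(n), key=lambda i: column[i])
    scores = [0.0] * n
    for rank, idx in enumerate(order):
        # (rank + 0.5) / n keeps the probability strictly inside (0, 1).
        scores[idx] = _STD_NORMAL.inv_cdf((rank + 0.5) / n)
    return scores


def _correlation(a, b):
    """Pearson correlation of two equally long score lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def _cholesky(matrix, jitter=1e-9):
    """Lower-triangular Cholesky factor; the jitter guards against singular input."""
    d = len(matrix)
    lower = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                lower[i][j] = math.sqrt(max(matrix[i][i] - s, 0.0) + jitter)
            else:
                lower[i][j] = (matrix[i][j] - s) / lower[j][j]
    return lower


def fit_gaussian_copula(rows):
    """Fit empirical marginals and a normal-score correlation to prior configurations."""
    columns = [list(col) for col in zip(*rows)]
    scores = [_normal_scores(col) for col in columns]
    d = len(columns)
    corr = [[_correlation(scores[i], scores[j]) for j in range(d)] for i in range(d)]
    return {"sorted_columns": [sorted(col) for col in columns], "chol": _cholesky(corr)}


def sample_configurations(model, count, rng):
    """Draw correlated normals, then map each back through its empirical quantiles."""
    chol = model["chol"]
    d = len(chol)
    samples = []
    for _ in range(count):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        correlated = [sum(chol[i][k] * z[k] for k in range(i + 1)) for i in range(d)]
        config = []
        for value, sorted_col in zip(correlated, model["sorted_columns"]):
            u = _STD_NORMAL.cdf(value)
            idx = min(int(u * len(sorted_col)), len(sorted_col) - 1)
            config.append(sorted_col[idx])
        samples.append(tuple(config))
    return samples


if __name__ == "__main__":
    # Hypothetical top configurations from a prior task: (tile size, unroll factor).
    prior_best = [(32, 4), (64, 8), (128, 16), (64, 4), (128, 8)]
    model = fit_gaussian_copula(prior_best)
    print(sample_configurations(model, 3, random.Random(0)))
```

Because sampled values are drawn from the empirical quantiles of the prior data, every generated parameter value is one that already appeared among the prior high performers; only the combinations are new.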

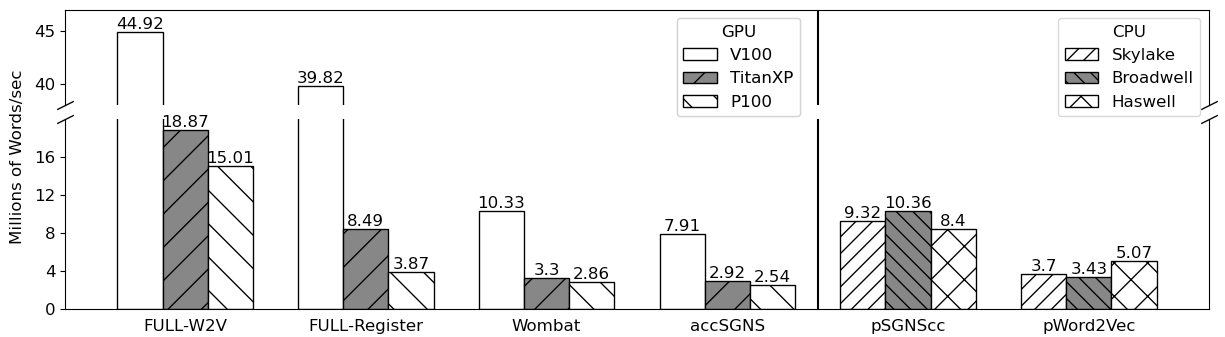

FULL-W2V: Fully Exploiting Data Reuse for W2V on GPU-Accelerated Systems

Word2Vec remains one of the most impactful innovations in the field of Natural Language Processing (NLP), representing latent grammatical and syntactical information in human text with dense vectors in a low dimension. Word2Vec has high computational cost due to the algorithm's inherent sequentiality, intensive memory accesses, and the large vocabularies it represents. While prior studies have investigated technologies to explore parallelism and improve memory system performance, they struggle to effectively gain throughput on powerful GPUs. We identify memory data access and latency as the primary bottleneck in prior works on GPUs, which prevents highly optimized kernels from attaining the architecture's peak performance. We present a novel algorithm, FULL-W2V, which maximally exploits the opportunities for data reuse in the W2V algorithm and leverages GPU architecture and resources to reduce access to low memory levels and improve temporal locality. FULL-W2V is capable of reducing accesses to GPU global memory significantly, e.g., by more than 89%, compared to prior state-of-the-art GPU implementations, resulting in significant performance improvement that scales across successive hardware generations. Our prototype implementation achieves 2.97X speedup when ported from NVIDIA Pascal P100 to Volta V100 cards, and outperforms the state-of-the-art by 5.72X on V100 cards with the same embedding quality. In-depth analysis indicates that the reduction of memory accesses through register and shared memory caching and high-throughput shared memory reduction leads to a significantly improved arithmetic intensity. FULL-W2V can potentially benefit many applications in NLP and other domains.
